A few months ago, my life had finally settled into a comfortable routine. The initial stress of a new job, apartment hunting in NYC, and moving to an unfamiliar city had subsided. What remained was a cycle of taking the same commute to work, talking to the same people, and eating the same foods. My turbulent takeoff into a new life had succeeded, and I was cruising at 35,000 feet with autopilot engaged.

Living on autopilot is both a luxury and a curse. Having a solid routine means I’ve tackled my greatest challenges, letting me live a relatively peaceful existence above the stormy clouds below. It equips me with a flight plan that guides me to some ultimate endpoint. The person who set that destination isn’t me, though. It is a more naive version of me, whose goals and aspirations inevitably differ from what they are now.

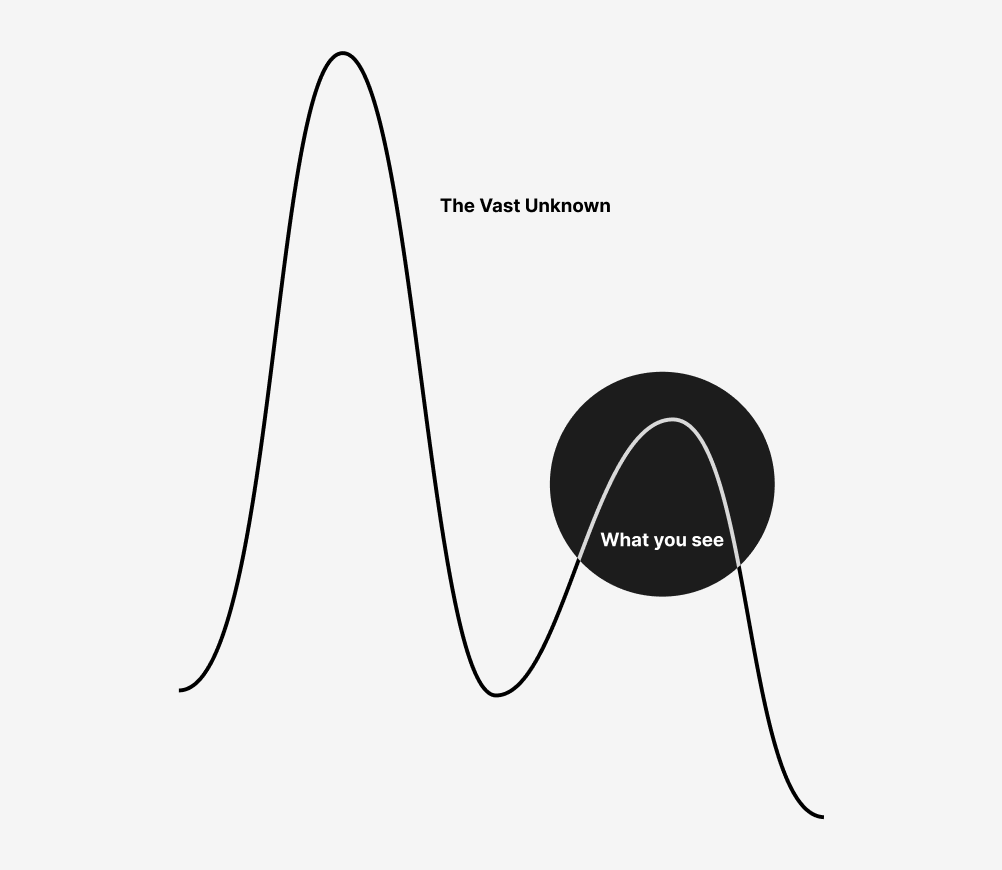

In optimization terms, I was stuck in a local optimum—a solution that seems good in the moment, but may not be the best overall outcome. Fortunately for me, there's a field riddled with local optima in unknown environments: reinforcement learning (RL). Reinforcement learning is a kind of AI where the answers aren’t presented to the model from the start. Instead, we give it an environment and the occasional thumbs up or thumbs down (the “reward function”), telling it how well it’s doing. The model interacts with its surroundings to find what series of actions will yield the highest reward. Once it finds an action sequence that seems to work, it must retrace its steps and find a strategy for consistently replicating those results. It’s not only figuring out how to learn (like most machine learning models), but also what to learn in the first place.

Sound familiar?

It’s no coincidence that training an RL model feels more like raising a child than any other form of machine learning[1]. The model starts as a baby with an infinitely malleable brain. Knowing nothing about the world, it tries anything it can but often fails to make much meaningful progress. After some crude learning, it proudly presents you with its first solution.

Thrilling! But the score in the bottom left tells a different story. We reward the model for how fast it can finish the track, but the model seems to think it’s being rewarded for the number of burnouts. Its limited experiences of the world have shown it enough to know that it’s doing something right, but not enough to pinpoint what exactly that something is. So, after earning some points for driving a few feet on the track, the model veers off into the grass to begin its spiraling dance. It has found its first local optimum, one which would require many changes to go from drift king to Formula 1 driver.

If we let this behavior continue, we might find that it learns to drift in faster and tighter circles. As the omniscient designers of the environment, however, we know there’s a much more lucrative solution available. In RL, this tension is known as the exploration-exploitation trade-off. We want the AI to exploit the knowledge it has already gained, but we also want it to explore a variety of potential solutions. It’s a trade-off because these motives directly oppose each other: on any given step, the model can either explore or exploit, not both.

There are many ways we can handle this trade-off[2], but the simplest strategy is to force the model to explore some percentage of the time. That means overriding the model’s best judgement and forcing it to take a random action. We start by doing this 100% of the time, and ramp it down as our confidence in what the model has learned increases. Importantly, the rate never drops below 5%, so that even an expert model has the chance to explore. This simple strategy produces impressive results[3].
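The exploration schedule described above is commonly called epsilon-greedy with a decaying epsilon. Here is a minimal sketch of the idea, not the code from the racing project: the linear ramp, the `decay_steps` value, and the list of per-action value estimates (`action_values`) are all assumptions made for illustration. Only the 100% starting rate and the 5% floor come from the text.

```python
import random


def epsilon_at(step, decay_steps=10_000, start=1.0, floor=0.05):
    """Exploration rate at a given training step.

    Ramps linearly from `start` (always random) down to `floor`
    over `decay_steps` steps, then stays at `floor`.
    The linear shape and decay_steps are illustrative assumptions.
    """
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (floor - start)


def choose_action(action_values, epsilon, rng=random):
    """Pick an action index from hypothetical per-action value estimates.

    With probability `epsilon`, ignore the estimates and act randomly
    (explore). Otherwise take the highest-valued action (exploit).
    """
    if rng.random() < epsilon:
        return rng.randrange(len(action_values))
    return max(range(len(action_values)), key=lambda a: action_values[a])


if __name__ == "__main__":
    values = [0.1, 0.9, 0.3]  # made-up estimates for three actions
    for step in (0, 5_000, 10_000, 50_000):
        eps = epsilon_at(step)
        print(step, round(eps, 3), choose_action(values, eps))
```

Early in training nearly every action is random. Once the ramp finishes, the model follows its own judgement about 95% of the time and still gets nudged off course on the remaining steps.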

The complexity of this racing environment pales in comparison to the intricacies of the world we live in, yet there’s much we can learn from our little model. Though we may never notice it, we might be doing some burnouts of our own, making illusory progress towards maximizing our true reward function. The more you see of the world, the clearer your definition of that reward function will be—the one that keeps you motivated, happy, and fulfilled. From time to time, disregard your tendency to exploit what you’re familiar with, and instead, explore what is unknown. Going beyond your comfort zone won’t cut it here. What I mean by exploration is actively disregarding your intuition and moving orthogonal to your judgement. Take a random walk through your city. Talk to someone from another country. Do everything except what you think you should be doing.

It’s not an easy ask. We have no omniscient guide forcing us to explore and choosing our random actions for us, so we rely on our imperfect selves, and humans are notoriously bad at generating random numbers[4]. Disregarding intuition requires a level of meta-cognition (thinking about thought) that few possess. Frankly, I’ve struggled to replicate true exploration myself, but the pursuit of it has improved my understanding of what I value. There will be times when you fall back into long periods of autopilot. It’s your job to disengage it, enter free-fall, and see what landscape lies below the clouds.

P.S. Check out my latest project, SOTA Stream, a research paper digest tailored to your interests.

[1] Though it’s common to develop an attachment to your models, reinforcement learning takes this to another level. Almost nothing is guaranteed to work, which makes the successes all the more emotional.

[3] Source Code: https://github.com/JinayJain/deep-racing

Balancing between exploration and stability has been a true struggle of mine. I'm glad you opened up the dialogue about this pressing issue. Let's go orthogonal to our judgment!

- Michael Sheen